Floating and fixed point arithmetic

A user posted a question on the Farseer Physics Engine forums about fixed point math. The topics include numeral systems, implementation details and performance. A whole lot of posts have been written about it. I'm not going to provide a full introduction to fixed point math; I'm simply going to provide an abstract answer to the question (one that applies to similar questions too).

Background

Back in the good old days (before the Intel i486 CPU) most applications relied on fixed point arithmetic. Floating point was something new that gave you a tradeoff between space, value range and precision, but at the cost of performance.

Let us take a quick example: a fixed point number with the radix (point/dot) after the fourth digit, 5186.3924, would be represented in floating point as the significand 5.1863924 with an exponent of 3:

- 5.1863924*10^3 = 5186.3924
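As a rough illustration of the split into significand and exponent, here is a minimal Python sketch using the standard `decimal` module. The variable names are only illustrative, and real hardware floats use base 2 rather than base 10.

```python
from decimal import Decimal

value = Decimal("5186.3924")

# adjusted() returns the exponent of the most significant digit,
# i.e. the exponent used in scientific notation.
exponent = value.adjusted()

# Shift the radix so that exactly one digit remains in front of it.
significand = value.scaleb(-exponent)

print(significand, exponent)  # 5.1863924 3

# Putting the two parts back together gives the original number.
print(significand * Decimal(10) ** exponent == value)  # True
```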

Let us have a look at the ups and downs of floating point math.

Positive side of floating point

There are both positive and negative sides of floating point arithmetic. It is basically a tradeoff that the programmer needs to balance; choosing the lesser of two evils.

Greater range and precision

Floating point numbers can represent a much wider range of values. With fixed point you cannot store both 0.00001234567 and 12.34567 at full precision in the same seven-digit format. The radix is fixed! You can with floating point, and that is one of the main reasons we use floating point over fixed point. Let us take a look at the following example from Wikipedia:

In a decimal floating-point system with three digits, the multiplication that humans would write as

- 0.12 × 0.12 = 0.0144

would be expressed as

- (1.2 × 10^-1) × (1.2 × 10^-1) = (1.44 × 10^-2).

In a fixed-point system with the decimal point at the left, it would be

- 0.120 × 0.120 = 0.014.

A digit of the result was lost because of the inability of the digits and decimal point to 'float' relative to each other within the digit string.
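The same three-digit example can be reproduced with a small Python sketch. It assumes the fixed point system keeps three digits after the decimal point and truncates the rest, which matches the result quoted above. The `decimal` module stands in for a decimal floating point system.

```python
from decimal import Decimal, Context, ROUND_DOWN

# Floating point: keep three significant digits, wherever the point lands.
float_ctx = Context(prec=3)
float_result = float_ctx.multiply(Decimal("0.12"), Decimal("0.12"))

# Fixed point: keep exactly three digits after the decimal point
# and truncate anything beyond them.
exact = Decimal("0.120") * Decimal("0.120")
fixed_result = exact.quantize(Decimal("0.001"), rounding=ROUND_DOWN)

print(float_result)  # 0.0144
print(fixed_result)  # 0.014
```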

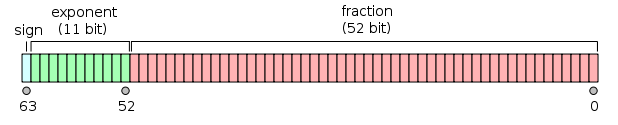

Since floating point numbers are split up into two parts, the significand and the exponent, it makes sense that the range and precision of floating point numbers are defined by the number of bits allocated to each of them. There are different floating point data types available:

- Single – Single precision with 32 bits. Called float in C# (System.Single).

  - Range: ±1.5 × 10^-45 to ±3.4 × 10^38

  - Precision: 7 digits

- Double – Double precision with 64 bits. Called double in C#.

  - Range: ±5.0 × 10^-324 to ±1.7 × 10^308

  - Precision: 15-16 digits

There are even 128 bit versions with a higher range and precision.
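The limits listed above can be checked with a short Python sketch. Python's own `float` is a 64-bit double on common platforms, so single precision is emulated here by round-tripping through `struct`. The helper name `to_single` is made up for this example.

```python
import struct
import sys

def to_single(x: float) -> float:
    """Round a double to the nearest 32-bit single precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

# Double precision limits as reported by the runtime.
largest_double = sys.float_info.max
double_digits = sys.float_info.dig
# Bit pattern 1 is the smallest positive (subnormal) double.
smallest_double = struct.unpack("<d", struct.pack("<Q", 1))[0]

# Single precision limits, built directly from their bit patterns.
largest_single = struct.unpack("<f", struct.pack("<I", 0x7F7FFFFF))[0]
smallest_single = struct.unpack("<f", struct.pack("<I", 1))[0]

print(smallest_single, largest_single)
print(smallest_double, largest_double, double_digits)

# Single precision has a 24-bit significand, so 2^24 + 1 cannot be
# stored exactly and is rounded to 2^24.
print(to_single(16777217.0))  # 16777216.0
```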

Negative side of floating point

Floating point numbers come at a cost. One is space, but that is negligible on today's computers with their large amounts of RAM and huge bandwidth. More important are the accuracy and performance of the arithmetic operations associated with floating point.

Performance

As mentioned before, the i486 was the first Intel x86 CPU with an integrated FPU, so floating point operations no longer had to be emulated in software or handed to a separate coprocessor. This sped up the operations by a large factor. Nearly all CPUs today have an FPU built in. Graphics cards are also essentially one big FPU: they specialize in floating point math and can process huge amounts of floating point numbers.

So what is the problem?

Well, as the forum post points out, some devices do not have an FPU, and even if they do, their floating point operations can be orders of magnitude slower than fixed point operations. A good example is the Xbox 360 platform. It does have an FPU and it even has the AltiVec instruction set. But the software designed for the Xbox 360 (the .NET Compact Framework, NetCF for short) does not utilize those instructions. This means we get lower floating point throughput on the Xbox 360.

Accuracy

Floating point numbers have a bad reputation for being inaccurate. They cannot represent numbers like π or 0.1 exactly, and the resulting inaccuracies may create inconsistencies in your application. Let's take an example:

If you take the sine of pi, then it should yield zero: sin(π) = 0

That is not the case with most (if not all) floating point implementations. sin(π) in C# yields the following result with double precision:

- 1.22460635382238E-16

Equality checks are also a cause for concern. If you check a condition like sin(π) == 0, it will yield false even though it is mathematically true. Normally we pick a small tolerance (epsilon) and check whether the difference between the two values is smaller than that.
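A minimal Python sketch of the epsilon comparison is shown below. The tolerance of 1e-9 is an arbitrary choice for illustration, and the exact value printed for sin(π) may differ from the C# result above depending on the platform's math library.

```python
import math

result = math.sin(math.pi)
print(result)  # a tiny non-zero number, not exactly 0

# A direct equality check fails even though sin(pi) is 0 mathematically.
print(result == 0.0)  # False

# Compare against a tolerance (epsilon) instead. The value 1e-9 is an
# assumption; pick one that suits the magnitude of your data.
EPSILON = 1e-9
print(abs(result - 0.0) < EPSILON)  # True

# The standard library offers the same idea through math.isclose.
print(math.isclose(result, 0.0, abs_tol=EPSILON))  # True

# 0.1 cannot be represented exactly either, so sums drift slightly.
print(0.1 + 0.2 == 0.3)  # False
print(math.isclose(0.1 + 0.2, 0.3))  # True
```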

The crazy part is that floating point behavior is not identical across platforms. The implementation on an Intel CPU might differ from the one on an AMD CPU. The differences can also sometimes lie in the operating system, further fueling the inconsistencies across platforms.

The solution

There is no perfect solution. As mentioned before, it is a tradeoff and sometimes floating point is the way to go. However, there are some alternatives.

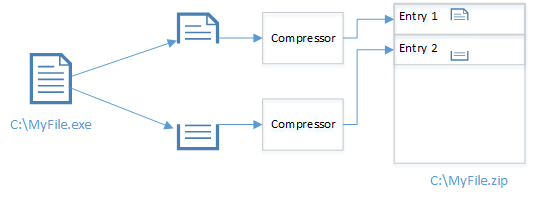

Fixed point

Fixed point math is based on simple integers. You can represent the number 1.200 by the integer value 1200 and a scaling factor of 1000 (1200 / 1000 = 1.200). Most fixed point libraries wrap an integer in a fixed point type and use a power-of-two scaling factor, so values can be rescaled with bit-shifting.
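To make the bit-shifting idea concrete, here is a minimal sketch of a 16.16 fixed point format in Python. The format, constant and function names are assumptions chosen for illustration and are not taken from any particular library. Python integers never overflow, so a port to a language with fixed-width integers would need a wider intermediate type for the multiplication and division.

```python
FRACTION_BITS = 16          # 16 bits after the radix (assumed format)
ONE = 1 << FRACTION_BITS    # the scaling factor, 65536

def to_fixed(x: float) -> int:
    """Convert a float to its scaled integer representation."""
    return int(round(x * ONE))

def to_float(f: int) -> float:
    """Convert a scaled integer back to a float for display."""
    return f / ONE

def fixed_mul(a: int, b: int) -> int:
    # The raw product carries twice the fraction bits, so shift back.
    return (a * b) >> FRACTION_BITS

def fixed_div(a: int, b: int) -> int:
    # Pre-shift the dividend so the quotient keeps its fraction bits.
    return (a << FRACTION_BITS) // b

a = to_fixed(1.5)
b = to_fixed(2.25)

# Addition and subtraction work directly on the scaled integers.
print(to_float(a + b))            # 3.75
print(to_float(fixed_mul(a, b)))  # 3.375
print(to_float(fixed_div(b, a)))  # 1.5
```

Every multiplication and division needs an extra shift, which is the scaling overhead that comes with fixed point math.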

Fixed point math has some advantages over floating point:

- Same behavior on all platforms (deterministic)

- Faster on platforms without FPU (and sometimes even with FPU)

- Lower space usage

But as always, there is a downside too:

- Complex implementations

- Lower range of values

- Overhead of constantly scaling values

Double precision

Double precision floating point data types are 64-bit and have a higher precision than normal 32-bit floating point. This is not really a solution, as it only makes the errors smaller: it might lower the impact of the problem, but the problem does not go away. Sometimes it is sufficient to switch to doubles, and it is far easier to do than converting everything to fixed point math.

This solution is only practical on platforms with an FPU; without one, double precision will run even slower than single precision floats.
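The following Python sketch gives a feel for how much doubles lower the impact of rounding errors. It adds 0.1 repeatedly in both precisions. Single precision is emulated by rounding to 32 bits after every addition, and the helper names and the iteration count are assumptions made for this example.

```python
import struct

def to_single(x: float) -> float:
    """Round a double to the nearest 32-bit single precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def accumulate(step: float, count: int, single: bool) -> float:
    """Add `step` to a running total `count` times."""
    if single:
        step = to_single(step)
    total = 0.0
    for _ in range(count):
        total += step
        if single:
            # Emulate a single precision add by rounding each result.
            total = to_single(total)
    return total

COUNT = 100_000
expected = 10_000.0  # 0.1 * 100000 in exact arithmetic

single_error = abs(accumulate(0.1, COUNT, single=True) - expected)
double_error = abs(accumulate(0.1, COUNT, single=False) - expected)

# Both totals are off, but the double precision error is far smaller.
print(single_error)
print(double_error)
```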
