Simulators And Determinism

When working with simulators, it is important to understand determinism and why it is hard to achieve across different platforms and configurations. Iterative simulators converge on their solution over multiple iterations, so they can accumulate errors inherited from the underlying system and become unstable over time.

Why is determinism important

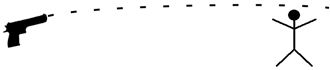

Does determinism in simulation systems even matter? The short answer is yes, it matters a great deal. The long answer is that simulation systems usually base their current world on the previous world. The simulation advances one time step at a time and calculates everything from the previous step. If you shoot a projectile towards a target, the projectile follows a certain curve. If you run the same simulation on a different platform, small differences in each step add up, and the projectile might end up somewhere totally different than in the first simulation.

Take the following example of a projectile trajectory. Both runs have the same initial conditions.

Simulation on platform 1:

Simulation on platform 2:

You would expect the results to be the same on both platforms, but they are not.
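To get a feel for how this happens, here is a minimal Python sketch. It is not the simulation from this post. It assumes two hypothetical platforms that differ only in how they round intermediate results: one keeps 64-bit precision, the other rounds every result to 32 bits. The `simulate` and `to_f32` helpers, the step count and the initial velocity are all made up for illustration.

```python
import struct


def to_f32(value):
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", value))[0]


def simulate(steps, dt, round_fn):
    """Step a projectile with explicit Euler, rounding after every operation."""
    x, y = 0.0, 0.0
    vx, vy = round_fn(10.0), round_fn(10.0)
    gravity = round_fn(-9.81)
    dt = round_fn(dt)
    for _ in range(steps):
        # Each step is computed from the previous one, so rounding
        # differences are carried forward instead of cancelling out.
        vy = round_fn(vy + round_fn(gravity * dt))
        x = round_fn(x + round_fn(vx * dt))
        y = round_fn(y + round_fn(vy * dt))
    return x, y


platform_1 = simulate(1000, 0.001, lambda v: v)  # hypothetical platform keeping 64 bits
platform_2 = simulate(1000, 0.001, to_f32)       # hypothetical platform rounding to 32 bits

print(platform_1)
print(platform_2)
print(platform_1 == platform_2)
```

The two positions stay close to each other after one simulated second, but they are not identical, and the gap keeps growing the longer the simulation runs.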

What can affect determinism

Several things can affect the determinism of a simulation: the order of elements, the rounding of floats, the low-level instructions and much more. Here are some examples of what can impact determinism:

- Sorting

  - Stable vs. unstable sorting

- Float operations

  - Rounding methods

  - Optimizations

- Hardware instructions

  - Some hardware has higher accuracy than others.

The list is by no means complete. Hundreds of factors can affect determinism; the list only contains those we focus on in this post.

Sorting

In Farseer Physics Engine we sort the contacts (where two shapes touch) by the amount the two shapes overlap. This way we can resolve the deepest contacts first and get a more realistic simulation. We used to use Array.Sort(), which is based on quicksort, but quicksort is not a stable sorting algorithm. Stable here means that elements with equal keys keep the same relative order after sorting as they had before. If two contacts had the same amount of penetration, they might get shuffled around and their IDs would end up out of order. We switched to an insertion sort instead, and suddenly the whole simulation behaved differently. That was not a big problem in itself, since it behaves the same way across all platforms, but simulations recorded with previous versions of the physics simulator no longer matched newer versions.
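The difference between a stable and an unstable sort can be sketched in a few lines of Python. This is not Farseer's code: the `(id, penetration)` tuples are a hypothetical stand-in for contacts, and selection sort is used only because it is a short, easy-to-follow unstable algorithm (the original problem came from quicksort).

```python
def insertion_sort(contacts):
    """Stable: an element only moves past neighbours that are strictly shallower."""
    result = list(contacts)
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Deepest first; stop at equal depth so ties keep their original order.
        while j >= 0 and result[j][1] < current[1]:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result


def selection_sort(contacts):
    """Unstable: the long-distance swap can reorder contacts of equal depth."""
    result = list(contacts)
    for i in range(len(result)):
        deepest = i
        for j in range(i + 1, len(result)):
            if result[j][1] > result[deepest][1]:
                deepest = j
        result[i], result[deepest] = result[deepest], result[i]
    return result


# (contact id, penetration depth); contacts 1 and 2 overlap by the same amount.
contacts = [(1, 0.5), (2, 0.5), (3, 0.9)]

stable = insertion_sort(contacts)
unstable = selection_sort(contacts)

print(stable)    # [(3, 0.9), (1, 0.5), (2, 0.5)]
print(unstable)  # [(3, 0.9), (2, 0.5), (1, 0.5)]
```

Both results are correctly sorted by depth, but the unstable version swaps contacts 1 and 2. A solver that resolves contacts in list order would therefore process them in a different order and produce a different simulation.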

Floating point operations

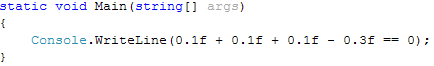

Floats can be tricky to work with. I took the following example from this article on floating point in .NET:

You would guess that the program always returns true, but that is not the case. If compiled in release mode (to enable optimizations) for x86, it returns false. Even though you can represent two values exactly, the result of an operation on those values might not be exact. There is a standard for floating point operations (IEEE 754), but it allows different platforms to return different values. This means that even if two systems follow the same standard, they might return different values. The only thing you can be sure of is that a float operation returns the same value every time on the same platform.

Let us look at what happens under the hood.

On x86, float operations are carried out in 80-bit registers, while values in memory are only 32 or 64 bits wide. The value gets calculated with high precision inside the register, but gets truncated when it is written to memory. Some compilers optimize your code in such a way that the value still in the register gets compared to the value in memory. Since the memory value is truncated, it is not equal to the non-truncated value. This is what happens in the C# example above. To combat this we can use some tricks to disable float optimizations (by making the float ineligible for the optimization), but the simplest thing is to check whether the two floats are within an epsilon of each other. More on this further down.
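The register-versus-memory effect can be imitated in Python. This is an analogy under stated assumptions, not the C# example: Python's 64-bit float plays the role of the wide register, and a 32-bit float plays the role of the narrower value in memory. The `store_as_f32` helper is made up for illustration.

```python
import struct


def store_as_f32(value):
    """Round a 64-bit float to 32 bits, as a store to a narrower slot would."""
    return struct.unpack("f", struct.pack("f", value))[0]


a = store_as_f32(0.1)  # a value that fits exactly in 32 bits

in_register = a * 3.0                  # product kept at the wider precision
in_memory = store_as_f32(in_register)  # same product after being stored

print(in_register == in_memory)             # False: the stored copy lost bits
print(abs(in_register - in_memory) < 1e-6)  # True: they agree within a tolerance
```

The inputs are identical and the operation is identical. The comparison fails only because one side was truncated and the other was not.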

Hardware Instructions

Let us take the fused multiply-accumulate instruction as an example. This instruction is found in IBM PowerPC and Intel Itanium processors and computes ax ± b with a single rounding instead of two. This increases accuracy (and performance, because fewer rounding steps are needed), so a simulation will run with higher accuracy on the PowerPC or Intel Itanium platform than on the PC platform.

This is just one of many such instructions. Some platforms have lower accuracy, some have higher. If you develop a simulation that runs on PC, make sure to test it on the other platforms you officially support.
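The effect of a single rounding can be emulated without special hardware. The sketch below is an emulation, not a call to a real fused instruction: it uses Python's `fractions.Fraction` to compute ax + b exactly and rounds once at the end, and compares that to the usual two-step computation. The function names and input values are chosen for illustration.

```python
from fractions import Fraction


def multiply_add_two_roundings(a, x, b):
    """a * x is rounded to a double, then the sum is rounded again."""
    return a * x + b


def multiply_add_one_rounding(a, x, b):
    """Emulated fused multiply-add: exact arithmetic, one rounding at the end."""
    exact = Fraction(a) * Fraction(x) + Fraction(b)
    return float(exact)


a = 1.0 + 2.0 ** -30
x = 1.0 - 2.0 ** -30
b = -1.0

two_roundings = multiply_add_two_roundings(a, x, b)
one_rounding = multiply_add_one_rounding(a, x, b)

print(two_roundings)  # 0.0, the product was rounded to exactly 1.0 first
print(one_rounding)   # a tiny negative value, -(2 ** -60)
```

The exact product is 1 - 2^-60, which is too close to 1 to survive the intermediate rounding. The two-step version therefore returns zero, while the single-rounding version keeps the small remainder.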

What can we do about it

Now that we know some of the factors that influence the determinism of a simulation, we can apply some techniques to increase it.

The first thing is obvious: use stable sorting methods. It is as simple as that. Anything float-related is a little more complicated. There are four ways of dealing with float accuracy:

- Use doubles

- Use decimal

- Epsilon

- Don’t use floats

Double and decimal

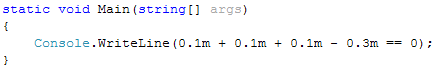

Using doubles simply shifts the problem. It might not be as visible as before, but it is still there. Floats have a precision of about 7 digits, while doubles have 15-16 digits. Decimal, on the other hand, stores base-10 digits with a precision of 28-29 digits, so decimal fractions such as 0.1 are represented exactly, although results that need more digits than that are still rounded. Take a look at the following example:

The code above will write false for reasons described earlier in this post. The same code using the decimal data type:

The above example will return true, just as we would expect. Decimals are slower than floats or integers because of the added precision, but in places where accuracy is paramount they might be the only solution.
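The same contrast can be shown with Python's `decimal` module. It is not the .NET decimal type, but it works on the same principle of storing base-10 digits, and its default context happens to use 28 significant digits. The variable names are made up for this sketch.

```python
from decimal import Decimal

# Binary floats: 0.1, 0.2 and 0.3 are all approximations, so the sum misses.
float_sum_matches = (0.1 + 0.2 == 0.3)

# Base-10 decimals: the same values are stored exactly, so the sum matches.
decimal_sum_matches = (Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))

# Decimals still round when a result needs more digits than they can hold.
one_third = Decimal(1) / Decimal(3)
round_trip_matches = (one_third * 3 == Decimal(1))

print(float_sum_matches)    # False
print(decimal_sum_matches)  # True
print(round_trip_matches)   # False
```

The last line is a reminder that a decimal type removes the base-2 representation error, not rounding in general.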

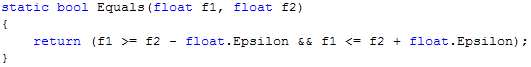

Epsilon

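In .NET, Epsilon is the smallest positive float value greater than zero. Comparing against a small tolerance, usually also called epsilon, lets you check the equality of two floats even after they have been rounded. Note that float.Epsilon itself is far too small to absorb rounding errors on ordinary values, so the tolerance is normally a larger value chosen to match the magnitude of the numbers involved.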

With a suitable tolerance, the equality check between two floats no longer fails because of small rounding differences.
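A tolerance-based comparison can be sketched as follows, in Python. The function names and the default tolerance of `1e-6` are assumptions picked for illustration; the right tolerance depends on the values your simulation works with. The relative variant scales the tolerance by the larger of the two magnitudes.

```python
def nearly_equal_absolute(a, b, tolerance=1e-6):
    """Equal if the values differ by no more than a fixed amount."""
    return abs(a - b) <= tolerance


def nearly_equal_relative(a, b, tolerance=1e-6):
    """Equal if the values differ by no more than a fraction of their size."""
    return abs(a - b) <= tolerance * max(abs(a), abs(b))


# Ordinary magnitudes: both checks absorb the rounding error.
print(nearly_equal_absolute(0.1 + 0.2, 0.3))  # True
print(nearly_equal_relative(0.1 + 0.2, 0.3))  # True

# Large magnitudes: a fixed tolerance is too strict.
print(nearly_equal_absolute(1e10, 1e10 + 1e-5))  # False
print(nearly_equal_relative(1e10, 1e10 + 1e-5))  # True

# Tiny magnitudes: a fixed tolerance is too lenient.
print(nearly_equal_absolute(1e-9, 2e-9))  # True, although one is twice the other
print(nearly_equal_relative(1e-9, 2e-9))  # False
```

Neither check is right in every situation. Python's standard library combines both in `math.isclose`, which takes a relative and an absolute tolerance.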

Fixed point

The last option is to not use floats at all and use integers instead. This is called fixed point arithmetic. In fixed point math you have a fixed number of digits after the radix point. A value is basically an integer that you scale by some scale factor. Take the number 1.595 as an example: it is the same as 1595/1000, so 1000 is the scale factor. Not only can this improve accuracy, it can also improve performance on devices without an FPU.
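Here is a minimal fixed point sketch in Python using the scale factor of 1000 from the example above. The helper names are hypothetical, and a real fixed point library would also deal with overflow and rounding modes, which are left out here.

```python
SCALE = 1000  # three digits after the radix point


def fixed_add(a, b):
    """Both operands share the same scale, so addition is plain integer addition."""
    return a + b


def fixed_mul(a, b):
    """The raw product carries SCALE twice; divide once to restore the scale."""
    return (a * b) // SCALE


def fixed_to_string(value):
    """Format a scaled integer as a decimal string with three fractional digits."""
    sign = "-" if value < 0 else ""
    whole, fraction = divmod(abs(value), SCALE)
    return f"{sign}{whole}.{fraction:03d}"


a = 1595  # represents 1.595
b = 2000  # represents 2.000

print(fixed_to_string(fixed_add(a, b)))  # 3.595
print(fixed_to_string(fixed_mul(a, b)))  # 3.190
```

Because every operation is integer arithmetic, the result is the same on any platform. The price is a fixed resolution: digits beyond the third decimal place are dropped by the integer division in `fixed_mul`.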

Conclusion

Use stable sorting methods if the order of elements is important, and take great care with floats in algorithms that are sensitive to small errors. Use doubles if you simply need more precision, or use fixed point math if you need exact values. Floating point values are not evil; they are a trade-off between performance and accuracy, but you might need epsilon-based equality checks in places where you do float operations.

Comments

return Abs(float1 - float2) <= epsilon;

But perhaps even more useful is relative tolerance:

return Abs(float1 - float2) <= epsilon * Max(Abs(float1), Abs(float2));
